Make Sensitivity Analyses Work for You.

Sensitivity analyses can be an incredibly useful tool for model diagnostics, for insight into model specification, and more. Learn how we think about sensitivity analyses and when to use them.

Have you ever wondered whether the model you are estimating is spurious, or overly influenced by a single specification choice or data condition? If so, sensitivity analyses can help answer these questions. Models are tools we use to understand a phenomenon or process whose true mechanisms we don’t fully know. Because of this, our models are always simpler than the phenomenon itself. As George E.P. Box famously noted:

All models are wrong, but some are useful.

This highlights the reality that models represent data-generating processes as closely as possible, but none fully capture the true underlying mechanism.

Sensitivity analyses help us understand how much results change when we adjust model specifications or data inputs. They are especially valuable in exploratory research based on non-experimental data. The goal is to assess how much the primary findings shift when we alter model assumptions or inputs. For example, one common approach is to estimate the model with and without extreme values.

This post covers when to use sensitivity analyses and best practices for conducting them.

What Do We Mean by Sensitivity Analyses?

Sensitivity analyses test how much model results change when we alter assumptions, vary inputs, or adjust the data being used. The goal is to determine whether these changes meaningfully affect how we interpret the results. In short, they explore the robustness of primary findings.

While sensitivity analyses overlap with validation or robustness checks, they are broader in scope. Validation often focuses on accuracy, while robustness checks look at consistency across models. Sensitivity analyses encompass both, but also explicitly test how fragile or stable results are under different assumptions.

Why Sensitivity Analyses Matter

Sensitivity analyses can reveal several issues with model results:

- Fragile parameter estimates: Parameter estimates that change drastically, sometimes even flipping signs, when we include different predictors or interactions.

- Model structures, data conditions, or estimation techniques influencing results: For example, error variance heterogeneity or different correlation structures in time-series or longitudinal data.

- Data points influencing model results: Extreme values can strengthen or weaken the model results. I prefer to call these data points extreme values rather than outliers, and I reserve the term outliers for observations that are not actually part of the desired population. If they are outliers, I can justify removing them; if they are extreme values, I cannot justify their exclusion.

By ruling out these sources of instability, we can build confidence in the results. Below, I share common sensitivity analyses I run and how I conduct them.

Different Flavors of Sensitivity Analyses

I often use sensitivity analyses to test the impact of extreme data points and of different data conditions or estimation techniques.

Extreme values

To assess their impact, I fit a model with all the data (including extreme values) and compare it to one with those values removed. In many cases, results change little, but exploring these differences is essential. Excluding extreme values can shrink or amplify effect sizes, changing their practical implications.
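The with-and-without comparison can be sketched in a few lines of Python. This is a minimal illustration, not the workflow used for any real analysis: the data are made up, the helper names `ols_slope` and `is_extreme` are hypothetical, and the 1.5 × IQR rule for flagging extreme values is an assumption chosen only for the example.

```python
from statistics import fmean, quantiles


def ols_slope(x, y):
    """Slope of the simple least-squares line y = a + b * x."""
    x_bar, y_bar = fmean(x), fmean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    return sxy / sxx


def is_extreme(values, k=1.5):
    """Flag values outside the k * IQR fences (an assumed rule, one of many)."""
    q1, _, q3 = quantiles(values, n=4)
    low, high = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    return [v < low or v > high for v in values]


# Made-up illustrative data: the last observation has an unusually large y.
x = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
y = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2, 13.9, 16.1, 18.0, 40.0]

flags = is_extreme(y)
x_trim = [xi for xi, flagged in zip(x, flags) if not flagged]
y_trim = [yi for yi, flagged in zip(y, flags) if not flagged]

slope_all = ols_slope(x, y)             # fit with every observation
slope_trim = ols_slope(x_trim, y_trim)  # fit with flagged values removed

print(f"Slope, all data:               {slope_all:.3f}")
print(f"Slope, extreme values removed: {slope_trim:.3f}")
```

In this toy data set a single flagged observation moves the slope noticeably. Whether a shift of that size matters depends on the practical question the model is meant to answer.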

Data conditions and estimation techniques

I also fit the same model under different assumptions. For example, to test whether error heterogeneity affects results, I compare a model that assumes constant error variance with one that relaxes this assumption. I then assess whether the results differ in meaningful ways.
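As a rough sketch of this kind of comparison, the Python below computes the standard error of a regression slope two ways: the classical formula, which assumes constant error variance, and a heteroskedasticity-robust (White, HC0) formula, which does not. Robust standard errors are only one way to relax the assumption and are used here as a stand-in; modeling the variance directly is another. The data and the function name `slope_and_ses` are invented for the example.

```python
from math import sqrt
from statistics import fmean


def slope_and_ses(x, y):
    """Return (slope, classical SE, HC0 robust SE) for y = a + b * x."""
    n = len(x)
    x_bar, y_bar = fmean(x), fmean(y)
    dx = [xi - x_bar for xi in x]
    sxx = sum(d ** 2 for d in dx)
    slope = sum(d * (yi - y_bar) for d, yi in zip(dx, y)) / sxx
    intercept = y_bar - slope * x_bar
    resid = [yi - intercept - slope * xi for xi, yi in zip(x, y)]

    # Classical SE: one common error variance for every observation.
    sigma2 = sum(e ** 2 for e in resid) / (n - 2)
    se_classic = sqrt(sigma2 / sxx)

    # HC0 robust SE: each squared residual stands in for its own variance.
    se_robust = sqrt(sum(d ** 2 * e ** 2 for d, e in zip(dx, resid))) / sxx
    return slope, se_classic, se_robust


# Made-up data in which the noise grows with x (unequal error variance).
x = list(range(1, 11))
noise = [0.3 * xi * (1 if i % 2 == 0 else -1) for i, xi in enumerate(x)]
y = [2 * xi + e for xi, e in zip(x, noise)]

slope, se_classic, se_robust = slope_and_ses(x, y)
print(f"Slope:        {slope:.3f}")
print(f"Classical SE: {se_classic:.4f}")
print(f"Robust SE:    {se_robust:.4f}")
```

Here the point estimate is identical under both approaches and only the standard error changes, so the question becomes whether the resulting interval would alter the interpretation.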

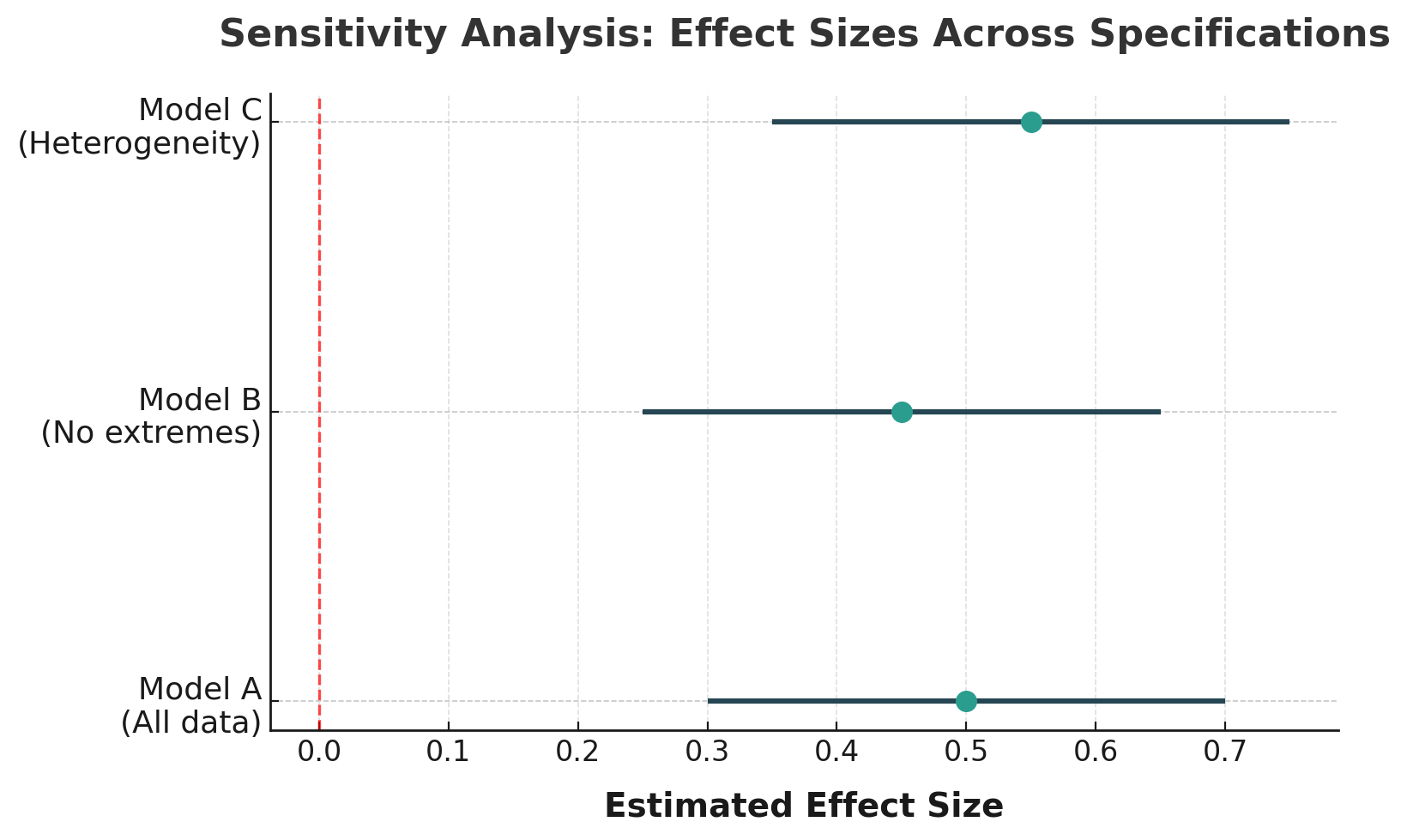

Evaluating Differences

I use visualizations and tables to compare model specifications side by side. This often highlights that standard errors change more than parameter estimates when we vary assumptions about the data. The comparison plot example shows three different results, where the dot is the parameter estimate and the bars represent the 95% confidence interval for each approach.

When communicating results, I focus less on statistical significance and more on whether conclusions or recommendations shift. For example, if an effect size drops from medium to small across sensitivity analyses, that change matters for policy or future research decisions. In the best case, effect sizes remain consistent in both direction and magnitude across analyses, suggesting robust results.
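A side-by-side comparison like this can be assembled with a small helper. The sketch below is only illustrative: the estimates and standard errors are hypothetical placeholder numbers rather than results from a real analysis, the function name `summarize` is invented, and the intervals assume a normal approximation.

```python
from statistics import NormalDist

# About 1.96; assumes a normal approximation for the 95% interval.
Z_95 = NormalDist().inv_cdf(0.975)


def summarize(results):
    """One row per specification: estimate, 95% CI, and % change from the first."""
    baseline = next(iter(results.values()))[0]
    rows = []
    for label, (estimate, se) in results.items():
        rows.append({
            "spec": label,
            "estimate": estimate,
            "ci_low": estimate - Z_95 * se,
            "ci_high": estimate + Z_95 * se,
            "pct_change": 100 * (estimate - baseline) / abs(baseline),
        })
    return rows


# Hypothetical placeholder values: (estimate, standard error) per specification.
results = {
    "Primary model": (0.50, 0.10),
    "Extreme values removed": (0.46, 0.09),
    "Unequal error variance": (0.50, 0.14),
}

rows = summarize(results)
# True when every estimate points in the same direction.
same_sign = len({row["estimate"] > 0 for row in rows}) == 1

for row in rows:
    print(f"{row['spec']:<24} {row['estimate']:6.2f} "
          f"[{row['ci_low']:6.2f}, {row['ci_high']:6.2f}] "
          f"{row['pct_change']:+6.1f}%")
print("Same direction across specifications:", same_sign)
```

Reporting the percent change alongside each interval keeps the focus on direction and magnitude rather than on whether a result crosses a significance threshold.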

Common Pitfalls to Avoid

Although sensitivity analyses are powerful, several pitfalls can undermine their usefulness:

- Running too many analyses: This risks “fishing” for a preferred result.

- Selective reporting: Failing to disclose what was tested weakens credibility.

- Treating them as an afterthought: Sensitivity checks should be part of the analytic plan, not an optional add-on.

- Assuming they fix poor design: No sensitivity analysis can turn an observational study into a causal one.

Transparency is essential. Share code whenever possible, or at least provide detailed descriptions of the primary analysis and all sensitivity analyses performed. Many sensitivity checks can even be planned before data collection, such as identifying likely model assumptions or alternative specifications.

In Summary

Sensitivity analyses are valuable for more than academic research; they strengthen all types of analytics. By testing how sensitive key results are to modeling choices or data inputs, we can increase confidence in findings, identify areas that need further evidence, and provide stronger empirical support for decision-making.

Next time you run a model, ask: How sensitive are my results?